Lakebase (OLTP)

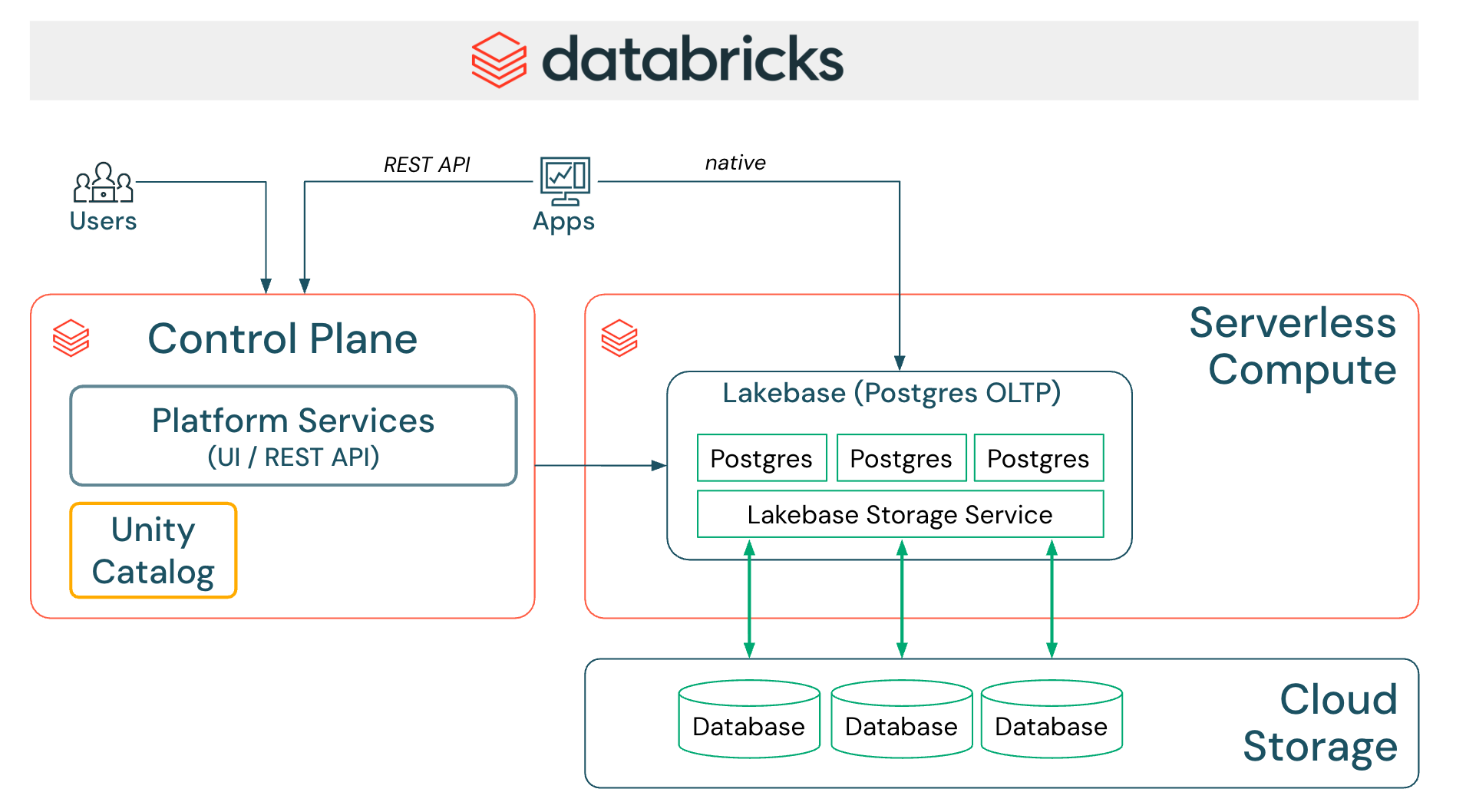

Lakebase brings PostgreSQL-compatible OLTP capabilities to the Databricks Lakehouse, enabling transactional workloads alongside analytics.

Key capabilities

- PostgreSQL Compatibility - Use standard PostgreSQL clients and drivers

- Unity Catalog Integration - Full governance and lineage tracking

- Serverless Deployment - Managed infrastructure with automatic scaling

Documentation: Lakebase

Integration requirements

Partners integrating with Lakebase must meet the following requirements:

| Requirement | Description |
|---|---|
| Supported Connectors | Use JDBC, psql, psycopg2/3, or SQLAlchemy |
| User-Agent Telemetry | Set application_name in the connector |
| OAuth Authentication | OAuth that requires no manual intervention (required) |
| Named Connector | Dedicated Lakebase connector in your product |
| Documentation | Partner documentation for joint customers |

Authentication methods

OAuth authentication (preferred)

PostgreSQL clients do not natively obtain Databricks OAuth tokens; instead, Lakebase supports BYOT (Bring Your Own Token). The partner application must manage the full token lifecycle: generation, refresh, and handoff to the connector.

The partner application must:

- Obtain the OAuth token from Databricks via the SDK or REST API

- Pass the token to the PostgreSQL connector during connection setup

- Handle token refresh as part of the connection logic

In practice, the partner application must implement OAuth flows similar to IdP-based token generation and hand the resulting tokens to Lakebase (token federation logic).
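The token lifecycle above can be sketched as a small cache that refreshes the token shortly before it expires. This is a minimal sketch, not a Databricks API: `OAuthToken` and `LakebaseTokenCache` are hypothetical names, the `Supplier<OAuthToken>` stands in for whatever Databricks SDK or REST call the partner uses to obtain a token, and it assumes the token is passed to the PostgreSQL connector as the password.

```java
import java.time.Clock;
import java.time.Duration;
import java.time.Instant;
import java.util.Properties;
import java.util.function.Supplier;

/** A fetched OAuth token and the instant at which it expires. */
record OAuthToken(String value, Instant expiresAt) {}

/** Hypothetical cache that refreshes an OAuth token shortly before it expires. */
final class LakebaseTokenCache {
    private final Supplier<OAuthToken> fetcher; // stand-in for the Databricks SDK or REST call
    private final Duration refreshMargin;       // refresh this long before expiry
    private final Clock clock;                  // injectable so tests can control time
    private OAuthToken current;                 // null until the first fetch

    LakebaseTokenCache(Supplier<OAuthToken> fetcher, Duration refreshMargin, Clock clock) {
        this.fetcher = fetcher;
        this.refreshMargin = refreshMargin;
        this.clock = clock;
    }

    /** Returns the cached token, fetching a new one if it expires within refreshMargin. */
    synchronized String token() {
        Instant refreshDeadline = clock.instant().plus(refreshMargin);
        if (current == null || !refreshDeadline.isBefore(current.expiresAt())) {
            current = fetcher.get();
        }
        return current.value();
    }

    /**
     * Properties for opening a NEW connection.
     * Assumption: Lakebase accepts the OAuth token as the Postgres password.
     */
    Properties connectionProperties(String user) {
        Properties props = new Properties();
        props.setProperty("user", user);
        props.setProperty("password", token());
        props.setProperty("sslmode", "require");
        return props;
    }
}
```

Because the token is presented only during connection setup, the cache is consulted whenever a new connection is opened (for example, from a connection pool's connection factory). Making `token()` synchronized keeps concurrent connection attempts from fetching duplicate tokens.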

Role-based authentication (interim)

Use Postgres-native roles and grants as an interim solution.

Create a Postgres-native role:

```sql
CREATE ROLE lbaseadmin1 LOGIN PASSWORD '<your-secure-password>';
```

Grant permissions to the role:

```sql
GRANT USAGE ON SCHEMA my_schema TO lbaseadmin1;
-- Tables that already exist in the schema
GRANT SELECT ON ALL TABLES IN SCHEMA my_schema TO lbaseadmin1;

-- Tables created in this schema later by the role running this statement
ALTER DEFAULT PRIVILEGES IN SCHEMA my_schema GRANT SELECT ON TABLES TO lbaseadmin1;
```

Bulk grant across all non-system schemas (schemas where the current role lacks the required privileges are skipped with a notice):

```sql
DO $$
DECLARE
    r RECORD;
BEGIN
    FOR r IN
        SELECT schema_name
        FROM information_schema.schemata
        WHERE schema_name NOT IN ('pg_catalog', 'information_schema', 'pg_toast', '__db_system')
    LOOP
        BEGIN
            EXECUTE format('GRANT USAGE ON SCHEMA %I TO lbaseadmin1;', r.schema_name);
            EXECUTE format('GRANT SELECT ON ALL TABLES IN SCHEMA %I TO lbaseadmin1;', r.schema_name);
            EXECUTE format('ALTER DEFAULT PRIVILEGES IN SCHEMA %I GRANT SELECT ON TABLES TO lbaseadmin1;', r.schema_name);
        EXCEPTION WHEN insufficient_privilege THEN
            -- Skip this schema and continue with the next one
            RAISE NOTICE 'Skipped schema %', r.schema_name;
        END;
    END LOOP;
END
$$;
```
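If the partner application prefers to issue the grants itself (for example, to log each statement or run them over an existing JDBC connection), the same per-schema logic can be expressed client-side. `GrantScript` is a hypothetical helper, not part of any library. It always double-quotes identifiers, which makes any schema name safe to embed much like `format('%I')` does, but it assumes names are passed exactly as stored in the catalog (lowercase for identifiers created unquoted). Unlike the DO block, it has no exception handler, so a failing statement surfaces to the caller.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Hypothetical helper that builds the DO block's GRANT statements client-side. */
final class GrantScript {
    // The system schemas excluded by the DO block's WHERE clause.
    private static final Set<String> SYSTEM_SCHEMAS =
        Set.of("pg_catalog", "information_schema", "pg_toast", "__db_system");

    /** Always double-quotes an identifier, doubling any embedded double quotes. */
    static String quoteIdent(String name) {
        return "\"" + name.replace("\"", "\"\"") + "\"";
    }

    /** Returns the three read-only grant statements for every non-system schema. */
    static List<String> readOnlyGrants(List<String> schemas, String role) {
        String r = quoteIdent(role);
        List<String> statements = new ArrayList<>();
        for (String schema : schemas) {
            if (SYSTEM_SCHEMAS.contains(schema)) {
                continue; // never grant on system schemas
            }
            String s = quoteIdent(schema);
            statements.add("GRANT USAGE ON SCHEMA " + s + " TO " + r + ";");
            statements.add("GRANT SELECT ON ALL TABLES IN SCHEMA " + s + " TO " + r + ";");
            statements.add("ALTER DEFAULT PRIVILEGES IN SCHEMA " + s
                + " GRANT SELECT ON TABLES TO " + r + ";");
        }
        return statements;
    }
}
```

The schema list would typically come from the same `information_schema.schemata` query the DO block uses.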

Named connector requirements

Partners should create a dedicated Lakebase named connector for two reasons:

OAuth flows

The partner product UI must prompt the end user for the details of one of these flows:

- Client Credentials flow (M2M / service principal): Client ID and secret

- Interactive flow (U2M): OAuth app details
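For the Client Credentials (M2M) flow, the Client ID and secret collected in the UI are exchanged for a workspace OAuth token. The sketch below only builds the request; it assumes the Databricks workspace token endpoint `https://<workspace-host>/oidc/v1/token` with `grant_type=client_credentials` and `scope=all-apis`. `M2mTokenRequest` is a hypothetical name, and which token type a Lakebase instance expects should be confirmed against the Lakebase documentation.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Hypothetical helper: builds (but does not send) an M2M OAuth token request. */
final class M2mTokenRequest {
    static HttpRequest build(String workspaceHost, String clientId, String clientSecret) {
        // HTTP Basic credentials. Assumption: the client ID and secret contain
        // no characters that would need form-encoding first.
        String basic = Base64.getEncoder().encodeToString(
            (clientId + ":" + clientSecret).getBytes(StandardCharsets.UTF_8));
        return HttpRequest.newBuilder(URI.create("https://" + workspaceHost + "/oidc/v1/token"))
            .header("Authorization", "Basic " + basic)
            .header("Content-Type", "application/x-www-form-urlencoded")
            .POST(HttpRequest.BodyPublishers.ofString("grant_type=client_credentials&scope=all-apis"))
            .build();
    }
}
```

Sending it with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())` returns a JSON body. In a standard OAuth 2.0 token response, the token is in `access_token` and its lifetime in seconds is in `expires_in`, which gives the connector the expiry it needs to schedule refreshes.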

JDBC configuration for telemetry

To ensure application_name (the user agent) is captured correctly in downstream telemetry, the connector should set the following PostgreSQL JDBC driver property:

```java
// props: the java.util.Properties passed to DriverManager.getConnection
PGProperty.ASSUME_MIN_SERVER_VERSION.set(props, "9.1");
```

Because this setting lives in the dedicated Lakebase connector, it ensures accurate telemetry without affecting the product's integrations with other Postgres-compatible systems.
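Combining the telemetry setting with the other connection properties, a Lakebase connector might build its JDBC properties as follows. This sketch uses the string keys behind `PGProperty.APPLICATION_NAME` (`ApplicationName`) and `PGProperty.ASSUME_MIN_SERVER_VERSION` (`assumeMinServerVersion`) so it compiles without the driver on the classpath. `LakebaseJdbcProps` and the `sslmode=require` choice are assumptions, and the application name value should follow the Lakebase telemetry guidance.

```java
import java.util.Properties;

/** Hypothetical helper: JDBC properties for a Lakebase connection with telemetry set. */
final class LakebaseJdbcProps {
    static Properties build(String user, String password, String applicationName) {
        Properties props = new Properties();
        props.setProperty("user", user);
        props.setProperty("password", password); // OAuth token or Postgres role password
        props.setProperty("sslmode", "require");
        // Same keys as PGProperty.APPLICATION_NAME and PGProperty.ASSUME_MIN_SERVER_VERSION.
        props.setProperty("ApplicationName", applicationName);
        props.setProperty("assumeMinServerVersion", "9.1");
        return props;
    }
}
```

The properties are then passed to `DriverManager.getConnection("jdbc:postgresql://<host>/<database>", props)`. Our understanding of pgjdbc is that an assumed minimum server version of 9.0 or later makes the driver send application_name in the startup packet instead of issuing a `SET` after connecting, so the name is visible from the start of the session.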

Lakebase operational topics

Differentiating Lakebase from standard PostgreSQL

If the partner application needs to distinguish Lakebase from standard PostgreSQL (for example, to choose a different code path), use either of the following checks:

Option 1: Check shared libraries

Run SHOW shared_preload_libraries and check for neon and databricks_auth in the output.
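Option 1 can be wrapped in a small check on the value returned by SHOW shared_preload_libraries (read over JDBC with `Statement.executeQuery` and `ResultSet.getString(1)`). `LakebaseLibraries` is a hypothetical helper; it assumes the value is a comma-separated list whose entries may carry spaces or double quotes, and it requires both `neon` and `databricks_auth`. If the connecting role is not allowed to read the setting, the query fails, so a caller may want to fall back to the hostname check.

```java
import java.util.Arrays;
import java.util.Set;
import java.util.stream.Collectors;

/** Hypothetical helper: does a shared_preload_libraries value look like Lakebase? */
final class LakebaseLibraries {
    static boolean looksLikeLakebase(String showOutput) {
        // Split the comma-separated list; strip surrounding spaces and double quotes.
        Set<String> libs = Arrays.stream(showOutput.split(","))
            .map(s -> s.trim().replace("\"", ""))
            .filter(s -> !s.isEmpty())
            .collect(Collectors.toSet());
        // Both markers must be present.
        return libs.contains("neon") && libs.contains("databricks_auth");
    }
}
```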

Option 2: Check hostname patterns

AWS: instance-XXXXXXXX.database.cloud.databricks.com

Azure: instance-XXXXXXX.database.azuredatabricks.net
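Option 2 can be sketched as a hostname match. `LakebaseHost` is hypothetical, and the pattern assumes the instance identifier (the XXXXXXXX part above) consists only of letters, digits, and hyphens. Treat a match as a hint rather than proof: connections through a custom DNS name or proxy will not match.

```java
import java.util.Locale;
import java.util.regex.Pattern;

/** Hypothetical helper: matches the Lakebase hostname patterns for AWS and Azure. */
final class LakebaseHost {
    // Assumption: the instance identifier uses only letters, digits, and hyphens.
    private static final Pattern LAKEBASE_HOST = Pattern.compile(
        "instance-[a-z0-9-]+\\.database\\.(cloud\\.databricks\\.com|azuredatabricks\\.net)");

    static boolean isLakebaseHost(String host) {
        // Hostnames are case-insensitive; a trailing dot marks a fully qualified name.
        String h = host.toLowerCase(Locale.ROOT);
        if (h.endsWith(".")) {
            h = h.substring(0, h.length() - 1);
        }
        // matches() requires the whole hostname to fit the pattern.
        return LAKEBASE_HOST.matcher(h).matches();
    }
}
```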

Sync Lakehouse to Lakebase

Use SYNC TABLE to move data from the Lakehouse into Lakebase tables for OLTP-serving workloads.

Key points:

- A primary key is required to uniquely identify rows in the target Lakebase table

- After syncing, the system creates a corresponding foreign catalog in the Lakehouse referencing the Lakebase destination

- Synced objects appear as foreign tables in the Lakehouse and can be queried like any other foreign source

- Synced tables rely on declarative pipelines; partners can automate pipeline creation and management through the Databricks API or SDK

- Foreign catalogs created through sync are fully integrated into the Lakehouse governance model, enabling unified access control, lineage tracking, and auditing
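Because a synced table needs a primary key that identifies unique rows, a partner tool might sanity-check the chosen key columns before creating the sync. `PrimaryKeyCheck` is a hypothetical helper, not part of the Databricks SDK. It only inspects the rows it is given (for example, a sample read from the source table), so a pass is evidence rather than a guarantee.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical pre-sync check: do the key columns uniquely identify every given row? */
final class PrimaryKeyCheck {
    static boolean isValidKey(List<Map<String, Object>> rows, List<String> keyColumns) {
        if (keyColumns.isEmpty()) {
            return false; // a synced table needs at least one primary key column
        }
        Set<List<Object>> seen = new HashSet<>();
        for (Map<String, Object> row : rows) {
            List<Object> key = new ArrayList<>(keyColumns.size());
            for (String column : keyColumns) {
                Object value = row.get(column);
                if (value == null) {
                    return false; // missing or NULL key column
                }
                key.add(value);
            }
            if (!seen.add(key)) {
                return false; // duplicate key value: rows are not uniquely identified
            }
        }
        return true;
    }
}
```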

Sync Lakebase to Lakehouse

Importing from Lakebase to the Lakehouse is currently in Private Preview. Partners may request access to explore this capability.

Documentation: SYNC TABLE

Use cases

- Application Backends - Power transactional applications with Lakehouse data

- Operational Analytics - Combine OLTP and OLAP in a single platform

- Data Apps - Build interactive data applications with real-time updates

What's next

- Learn about telemetry and attribution for Lakebase

- Review the integration requirements for foundational guidance

- Explore data transformation patterns