Migration Assessment Report

This document describes the Assessment Report generated by the UCX tools. The main assessment report includes dashlets, widgets, and details of the assessment findings, along with common recommendations based on the corresponding Assessment Finding (AF) Index entries.

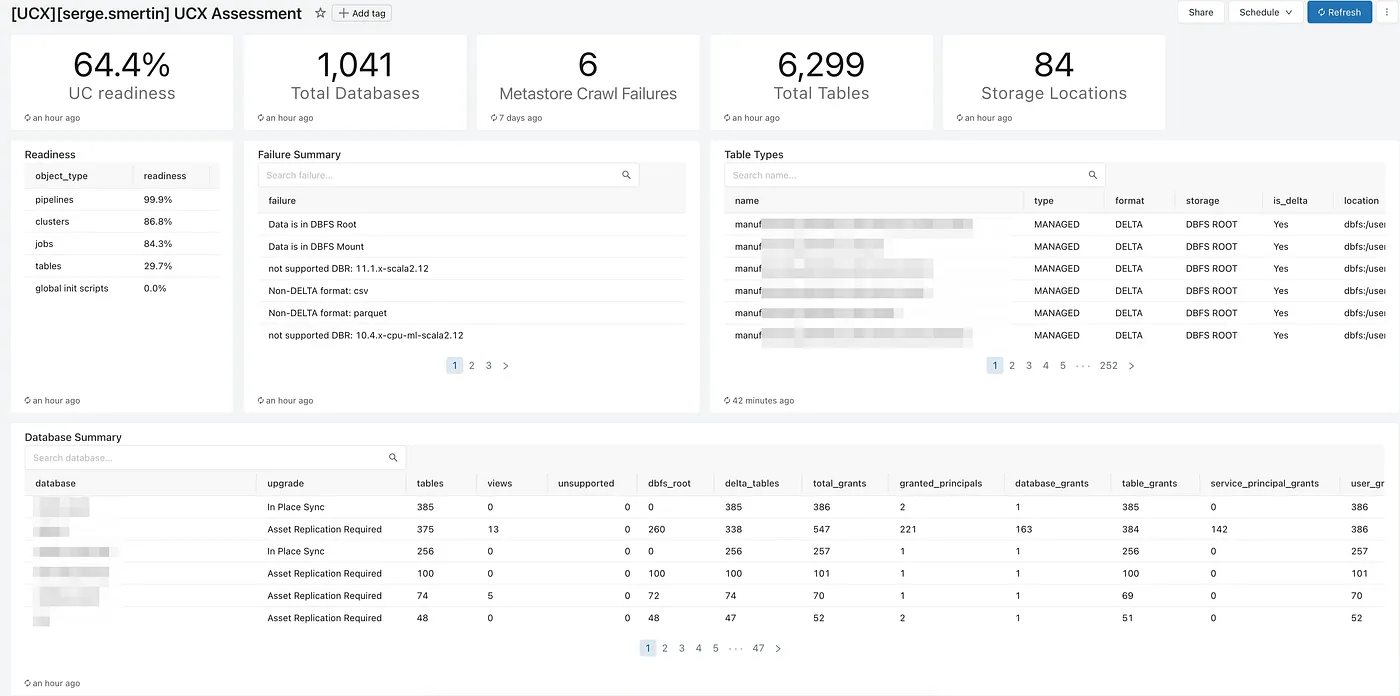

Assessment Report Summary

The Assessment Report (Main) is the output of the Databricks Labs UCX assessment workflow. This report queries the $inventory database (e.g. ucx) and summarizes the findings of the assessment. The link to the Assessment Report (Main) can be found in your home folder, under .ucx in the README.py file. You can also navigate directly to the Assessment Report by clicking the Dashboards icon on the left and locating the dashboard there.

Assessment Widgets

Readiness

This is an overall summary of the readiness detailed in the Readiness dashlet. This value is based on the ratio of findings to the total number of assets scanned.

Total Databases

The total number of hive_metastore databases found during the assessment.

Metastore Crawl Failures

Total number of failures encountered by the crawler while extracting metadata from the Hive Metastore and REST APIs.

Total Tables

Total number of Hive Metastore tables discovered.

Storage Locations

Total number of storage locations identified by scanning Hive Metastore tables and schemas.

Assessment Widgets

Assessment widgets query tables in the $inventory database and either summarize the findings or list them in detail.

The second row of the report starts with "Job Count", "Readiness", "Assessment Summary", "Table counts by storage" and "Table counts by schema and format".

Readiness

This is a rough summary of the workspace's readiness to run Unity Catalog governed workloads. Each line item is the number of compatible items in a class, expressed as a percentage of the total items in that class.
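As an illustration of the percentage described above, here is a minimal sketch in Python. The function name, the class names and the counts are hypothetical, and treating an empty class as 100% ready is an assumption, not documented UCX behavior.

```python
def readiness_percent(compatible: int, total: int) -> float:
    """Return compatible items as a percentage of the total items in a class."""
    if compatible < 0 or total < 0 or compatible > total:
        raise ValueError("expected 0 <= compatible <= total")
    if total == 0:
        # Assumption: a class with no items has nothing left to fix.
        return 100.0
    return round(100.0 * compatible / total, 1)


# Hypothetical (compatible, total) counts per asset class.
classes = {"clusters": (18, 24), "jobs": (40, 50), "tables": (0, 0)}
report = {name: readiness_percent(c, t) for name, (c, t) in classes.items()}
print(report)
```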

Assessment Summary

This is a summary count, per finding type, of all the findings identified during the assessment workflow. The assessment summary helps identify areas that need focus (e.g. tables on DBFS or clusters that need DBR upgrades).

Table counts by storage

This is a summary count of Hive Metastore tables per storage type: DBFS Root, DBFS Mount, and Cloud Storage (referred to as External). It also gives a summary count of tables that use storage types unsupported in Unity Catalog (such as WASB or ADL in Azure). Tables created from Databricks Demo Datasets are counted here as well.
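The grouping by storage type can be pictured with a small Python sketch. The prefix rules, the category labels and the sample locations below are assumptions chosen for illustration; they are not taken from the UCX source, which may classify locations differently.

```python
from collections import Counter


def classify_storage(location: str) -> str:
    """Map a table location to a storage category (illustrative rules only)."""
    loc = location.strip().lower()
    # Check the most specific DBFS prefixes first, then the DBFS root.
    if loc.startswith("dbfs:/databricks-datasets"):
        return "DEMO DATASET"
    if loc.startswith("dbfs:/mnt/"):
        return "DBFS MOUNT"
    if loc.startswith("dbfs:/"):
        return "DBFS ROOT"
    # Assumption: WASB (including wasbs) and ADL schemes count as unsupported.
    if loc.startswith(("wasb:", "wasbs:", "adl:")):
        return "UNSUPPORTED"
    return "EXTERNAL"


# Hypothetical table locations.
locations = [
    "dbfs:/user/hive/warehouse/sales.db/orders",
    "dbfs:/mnt/raw/events",
    "s3://example-bucket/curated/customers",
    "wasbs://data@example.blob.core.windows.net/legacy",
    "dbfs:/databricks-datasets/nyctaxi",
    "abfss://data@example.dfs.core.windows.net/gold",
]
counts = Counter(classify_storage(loc) for loc in locations)
print(dict(counts))
```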

Table counts by schema and format

This is a summary count of tables by Hive Metastore (HMS) table format (Delta and non-Delta) for each HMS schema.

The third row continues with "Database Summary".

Database Summary

This is a database-by-database assessment summary of the Hive Metastore, along with an upgrade strategy for each database.

In Place Sync indicates that the SYNC command can be used to copy the metadata into a Unity Catalog catalog.
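For a database marked In Place Sync, the statement to run might be assembled as in the Python sketch below. The helper name and the catalog and schema names are hypothetical. The sketch assumes the target schema keeps the source schema's name, and it defaults to DRY RUN so the statement reports what would happen without changing anything.

```python
def sync_schema_statement(target_catalog: str, schema: str, dry_run: bool = True) -> str:
    """Build a SYNC SCHEMA statement from hive_metastore into a Unity Catalog catalog."""
    for identifier in (target_catalog, schema):
        if not identifier or "`" in identifier:
            raise ValueError(f"invalid identifier: {identifier!r}")
    suffix = " DRY RUN" if dry_run else ""
    return (
        f"SYNC SCHEMA `{target_catalog}`.`{schema}` "
        f"FROM `hive_metastore`.`{schema}`{suffix}"
    )


# Hypothetical target catalog "main" and source schema "sales".
statement = sync_schema_statement("main", "sales")
print(statement)
```

The resulting string can be pasted into a SQL editor or passed to whatever SQL execution mechanism is available in the workspace.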

The fourth row contains "External Locations" and "Mount Points".

External Locations

Tables were scanned for LOCATION attributes, and the resulting list was distilled down to a set of External Locations. In Unity Catalog, create a STORAGE CREDENTIAL that can access these locations, then define a Unity Catalog EXTERNAL LOCATION for each item.
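Once a storage credential exists, the EXTERNAL LOCATION definitions could be generated from the widget's list with a helper such as the Python sketch below. The function name, location name, URL and credential name are all hypothetical, and the storage credential is assumed to have been created beforehand.

```python
def create_external_location_sql(name: str, url: str, credential: str) -> str:
    """Build a CREATE EXTERNAL LOCATION statement for one storage URL."""
    for identifier in (name, credential):
        if not identifier or "`" in identifier:
            raise ValueError(f"invalid identifier: {identifier!r}")
    if "'" in url:
        # Keep the sketch simple: reject URLs that would need quote escaping.
        raise ValueError(f"single quote not allowed in URL: {url!r}")
    return (
        f"CREATE EXTERNAL LOCATION IF NOT EXISTS `{name}` "
        f"URL '{url}' "
        f"WITH (STORAGE CREDENTIAL `{credential}`)"
    )


# Hypothetical location name, bucket path and credential name.
statement = create_external_location_sql(
    "raw_events", "s3://example-bucket/raw/events", "example_credential"
)
print(statement)
```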

Mount Points

Mount points are a popular means of providing access to external buckets and storage accounts. Unity Catalog offers more secure alternatives: EXTERNAL LOCATIONs and VOLUMEs. EXTERNAL LOCATIONs are the basis for EXTERNAL tables, schemas, catalogs and VOLUMEs. VOLUMEs are the basis for managing files. The recommendation is to migrate mount points to either EXTERNAL LOCATIONs or VOLUMEs. The Unity Catalog Create External Location UI will prompt for mount points to assist in creating EXTERNAL LOCATIONs.

Unfortunately, as of January 2024, cross-cloud external locations are not supported. Databricks-to-Databricks Delta Sharing may assist in upgrading cross-cloud mounts.

The next row contains the "Table Types" widget.

Table Types

This widget is a detailed list of each table with its format, storage type, location property and, for DBFS tables, the approximate table size. Upgrade strategies include:

- DEEP CLONE or CTAS for DBFS ROOT tables

- SYNC for DELTA tables (managed or external) stored outside the DBFS root (mount point or direct cloud storage path)

- Managed non-DELTA tables need to be upgraded to Unity Catalog by either:

  - Using CTAS to convert the table, targeting the Unity Catalog catalog, schema and table name

  - Moving the data to an EXTERNAL LOCATION and creating an EXTERNAL table in Unity Catalog
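The strategies in the list above can be read as a simple decision rule, sketched here in Python. The function name, parameter names and returned labels are hypothetical, and the final fallback is an assumption for combinations the list does not mention (for example, external non-DELTA tables on cloud storage).

```python
def upgrade_strategy(table_format: str, storage_type: str, is_managed: bool) -> str:
    """Suggest an upgrade strategy for one table, following the list above."""
    fmt = table_format.strip().upper()
    storage = storage_type.strip().upper()
    if storage == "DBFS ROOT":
        return "DEEP CLONE or CTAS"
    if fmt == "DELTA":
        # Managed or external DELTA tables on a mount point or cloud storage path.
        return "SYNC"
    if is_managed:
        return "CTAS, or move to an EXTERNAL LOCATION and create an EXTERNAL table"
    # Assumption: anything else is outside the list and needs manual review.
    return "REVIEW MANUALLY"


# Hypothetical rows: (format, storage type, managed?)
rows = [
    ("delta", "DBFS ROOT", True),
    ("delta", "DBFS MOUNT", False),
    ("parquet", "EXTERNAL", True),
    ("csv", "EXTERNAL", False),
]
for row in rows:
    print(row, "->", upgrade_strategy(*row))
```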

The following row includes "Incompatible Clusters" and "Incompatible Jobs".

Incompatible Clusters

This widget is a list of findings (reasons) and the clusters that may need upgrading. See the Assessment Finding Index (below) for specific recommendations.

Incompatible Jobs

This is a list of findings (reasons) and the jobs that may need upgrading. See the Assessment Finding Index for more information.

The final row includes "Incompatible Delta Live Tables" and "Incompatible Global Init Scripts".

Incompatible Object Privileges

These are permissions on objects that are not supported by Unity Catalog.

Incompatible Delta Live Tables

These are Delta Live Tables jobs that may be incompatible with Unity Catalog.

Incompatible Global Init Scripts

These are Global Init Scripts that are incompatible with Unity Catalog compute. As a reminder, global init scripts need to be on secure storage (Volumes or a Cloud Storage account and not DBFS).