DLT-META

Project Overview

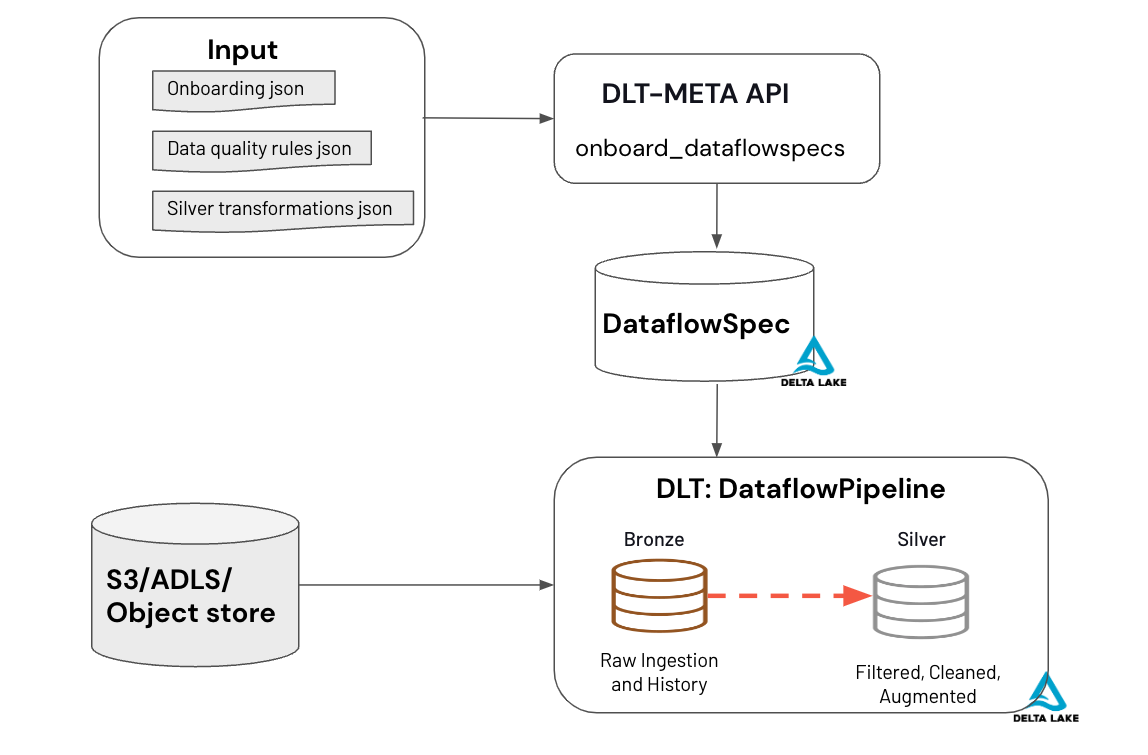

DLT-META is a metadata-driven framework designed to work with Lakeflow Declarative Pipelines. This framework enables the automation of bronze and silver data pipelines by leveraging metadata recorded in an onboarding JSON file. This file, known as the Dataflowspec, serves as the data flow specification, detailing the source and target metadata required for the pipelines.

In practice, a single generic pipeline reads the Dataflowspec and uses it to orchestrate and run the necessary data processing workloads. This approach streamlines the development and management of data pipelines, allowing for a more efficient and scalable data processing workflow.
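The metadata-driven idea described above can be illustrated with a small, self-contained sketch. It is not DLT-META's implementation: the field names (`data_flow_id`, `source_format`, `source_path`, `bronze_table`) and the reader functions are hypothetical, chosen only to show how one generic routine can serve many flows once the details live in metadata.

```python
import json

# Hypothetical onboarding entries. The field names are illustrative only
# and do not reproduce the real DLT-META onboarding schema.
ONBOARDING_JSON = """
[
  {"data_flow_id": "100", "source_format": "cloudFiles",
   "source_path": "/landing/customers", "bronze_table": "bronze.customers"},
  {"data_flow_id": "101", "source_format": "kafka",
   "source_path": "orders-topic", "bronze_table": "bronze.orders"}
]
"""


def read_cloud_files(spec):
    # Stand-in for a real Auto Loader read; returns a description string.
    return f"autoloader read of {spec['source_path']}"


def read_kafka(spec):
    # Stand-in for a real Kafka read; returns a description string.
    return f"kafka read of topic {spec['source_path']}"


# One generic dispatch table instead of one hand-written pipeline per source.
READERS = {"cloudFiles": read_cloud_files, "kafka": read_kafka}


def plan_pipeline(onboarding_json):
    """Return one (target_table, read_step) pair per onboarded flow."""
    plan = []
    for spec in json.loads(onboarding_json):
        reader = READERS[spec["source_format"]]
        plan.append((spec["bronze_table"], reader(spec)))
    return plan


plan = plan_pipeline(ONBOARDING_JSON)
for target, step in plan:
    print(f"{target} <- {step}")
```

Adding a new source in this sketch means adding one more JSON entry rather than writing new pipeline code, which is the property the framework relies on.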

Lakeflow Declarative Pipelines and DLT-META are designed to complement each other. Lakeflow Declarative Pipelines provide a declarative, intent-driven foundation for building and managing data workflows, while DLT-META adds a configuration-driven layer that automates and scales pipeline creation. By combining these approaches, teams can move beyond manual coding to templatize and automate pipelines, improving agility, governance, and efficiency at any scale.

DLT-META components:

Metadata Interface

- Capture input/output metadata in onboarding file

- Capture Data Quality Rules

- Capture processing logic as SQL in the Silver transformation file
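As a rough illustration of why capturing data quality rules as metadata is useful, the sketch below assumes rules are stored as a mapping from rule name to SQL condition and derives a predicate for rows that fail at least one rule. The rule names, the conditions, and the `quarantine_condition` helper are hypothetical and are not taken from DLT-META.

```python
# Hypothetical rule set: rule name -> SQL boolean condition.
rules = {
    "valid_id": "id IS NOT NULL",
    "valid_email": "email LIKE '%@%'",
}


def quarantine_condition(rules):
    """Build a SQL predicate for rows that fail at least one rule.

    Note: under SQL three-valued logic, a rule that evaluates to NULL makes
    the whole predicate NULL, so a production version would need to decide
    how such rows are treated.
    """
    passing = " AND ".join(f"({cond})" for cond in rules.values())
    return f"NOT ({passing})"


predicate = quarantine_condition(rules)
print(predicate)
```

Because the rules are plain data, the same mapping can feed both the pass filter and its inverse without duplicating the conditions by hand.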

Generic Lakeflow Declarative pipeline

- Apply appropriate readers based on input metadata

- Apply data quality rules with Lakeflow Declarative Pipelines expectations

- Apply CDC (apply changes) if specified in metadata

- Build the Lakeflow Declarative Pipelines graph based on input/output metadata

- Launch the Lakeflow Declarative Pipelines pipeline
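The graph-building step in the list above can be pictured as ordering tables by their declared inputs. The following sketch uses Python's standard `graphlib` module on a hypothetical set of flows; in a real pipeline the Lakeflow runtime resolves dependencies itself, so this only illustrates the idea and is not how DLT-META is implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical flows: each target table maps to the tables it reads from.
flows = {
    "bronze.customers": [],
    "bronze.orders": [],
    "silver.customers": ["bronze.customers"],
    "silver.orders": ["bronze.orders"],
}


def execution_order(flows):
    """Return the tables in an order where every input precedes its consumer."""
    return list(TopologicalSorter(flows).static_order())


order = execution_order(flows)
print(order)
```

The exact order among independent tables is not fixed; the only guarantee is that each bronze table appears before the silver table that reads from it.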

High-Level Solution overview:

How does DLT-META work?

Onboarding Job

- Option#1: DLT-META CLI

- Option#2: Manual Job

- Option#3: Databricks Notebook

Dataflow Lakeflow Declarative Pipeline

- Option#1: DLT-META CLI

- Option#2: DLT-META MANUAL

DLT-META support for Lakeflow Declarative Pipelines features

| Feature | DLT-META Support |
|---|---|
| Input data sources | Autoloader, Delta, Eventhub, Kafka, snapshot |
| Medallion architecture layers | Bronze, Silver |
| Custom transformations | Bronze and Silver layers accept custom functions |
| Data quality expectations support | Bronze, Silver layers |
| Quarantine table support | Bronze layer |
| create_auto_cdc_flow API support | Bronze, Silver layers |
| create_auto_cdc_from_snapshot_flow API support | Bronze layer |
| append_flow API support | Bronze layer |
| Liquid clustering support | Bronze, Bronze Quarantine, Silver tables |
| DLT-META CLI | databricks labs dlt-meta onboard, databricks labs dlt-meta deploy |
| Bronze and Silver pipeline chaining | Deploy dlt-meta pipeline with layer=bronze_silver option using default publishing mode |
| create_sink API support | Supported formats: external Delta table, Kafka; Bronze, Silver layers |
| Databricks Asset Bundles | Supported |
| DLT-META UI | Uses Databricks Lakehouse DLT-META App |

How much does it cost?

DLT-META has no direct cost of its own; the only cost is that of running Databricks Lakeflow Declarative Pipelines in your environment. The overall cost is determined primarily by [Databricks Lakeflow Declarative Pipelines pricing](https://www.databricks.com/product/pricing/lakeflow-declarative-pipelines).

More questions

Refer to the FAQ

Getting Started

Refer to the Getting Started guide

Project Support

Please note that all projects in the databrickslabs GitHub account are provided for your exploration only, and are not formally supported by Databricks with Service Level Agreements (SLAs). They are provided AS-IS and we do not make any guarantees of any kind. Please do not submit a support ticket relating to any issues arising from the use of these projects.

Any issues discovered through the use of this project should be filed as GitHub Issues on the Repo. They will be reviewed as time permits, but there are no formal SLAs for support.

Contributing

See our CONTRIBUTING for more details.