DAIS DEMO

DAIS 2023 DEMO:

DAIS 2023 Session Recording

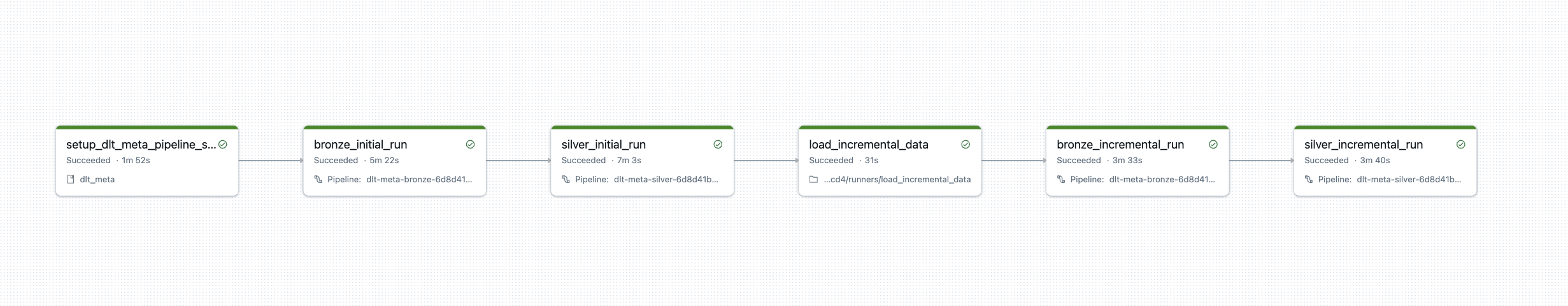

This demo showcases DLT-META’s ability to automatically create Bronze and Silver Lakeflow Declarative Pipelines in both initial and incremental load modes.

- Customer and Transactions feeds for initial load

- Adds the new Product and Stores feeds to the existing Bronze and Silver Lakeflow Declarative Pipelines through metadata changes

- Runs the Bronze and Silver Lakeflow Declarative Pipelines in incremental mode to load CDC events

Steps to launch DAIS demo in your Databricks workspace:

Launch Command Prompt

Install Databricks CLI

- Once you install Databricks CLI, authenticate your current machine to a Databricks Workspace:

    databricks auth login --host WORKSPACE_HOST

Install Python package requirements:

    # Core requirements
    pip install "PyYAML>=6.0" setuptools databricks-sdk
    # Development requirements (version specifiers containing ">" are quoted so the shell does not treat them as redirects)
    pip install flake8==6.0 delta-spark==3.0.0 "pytest>=7.0.0" "coverage>=7.0.0" pyspark==3.5.5

Clone dlt-meta:

    git clone https://github.com/databrickslabs/dlt-meta.git

Navigate to project directory:

    cd dlt-meta

Set the PYTHONPATH environment variable in your terminal:

    dlt_meta_home=$(pwd)
    export PYTHONPATH=$dlt_meta_home

Run the command:

    python demo/launch_dais_demo.py --uc_catalog_name=<<uc catalog name>> --cloud_provider_name=<<>>

- uc_catalog_name : Unity Catalog name

- cloud_provider_name : aws or azure or gcp

- You can also provide --profile=<<databricks profile name>> if you have already configured the Databricks CLI; otherwise the command prompts for the host and token.
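
As a rough illustration of the last two steps, the sketch below sets `PYTHONPATH`, checks the cloud provider value against the three options listed above, and prints the launch command for review instead of running it. The catalog name `my_catalog`, the provider `aws`, and the helper functions `is_valid_cloud_provider` and `build_launch_command` are hypothetical placeholders, not part of dlt-meta. It assumes a POSIX-compatible shell started from the dlt-meta directory.

```shell
# Hypothetical example values; replace them with your own.
uc_catalog_name="my_catalog"
cloud_provider_name="aws"

# Point PYTHONPATH at the current directory (assumed to be the dlt-meta checkout).
dlt_meta_home=$(pwd)
export PYTHONPATH="$dlt_meta_home"

# Succeed only for the three providers the demo lists.
is_valid_cloud_provider() {
  case "$1" in
    aws|azure|gcp) return 0 ;;
    *) return 1 ;;
  esac
}

# Print the launch command so it can be reviewed before it is run.
build_launch_command() {
  printf 'python demo/launch_dais_demo.py --uc_catalog_name=%s --cloud_provider_name=%s' "$1" "$2"
}

if is_valid_cloud_provider "$cloud_provider_name"; then
  launch_command=$(build_launch_command "$uc_catalog_name" "$cloud_provider_name")
  echo "$launch_command"
else
  echo "cloud_provider_name must be aws, azure, or gcp" >&2
fi
```

Once the printed command looks right, paste it into the terminal to launch the demo.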