Append FLOW Autoloader Demo

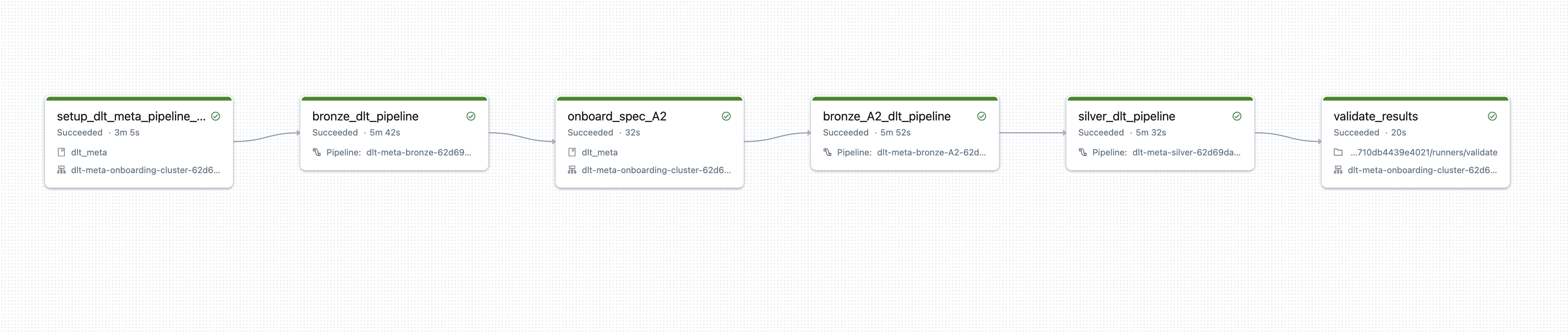

This demo performs the following tasks:

- Read from different source paths using autoloader and write to the same target using the dlt.append_flow API

- Read from different delta tables and write to the same silver table using the append_flow API

- Add file_name and file_path to the target bronze table for the autoloader source using the file metadata column
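The first and third tasks can be sketched roughly as follows. This is a minimal illustration, not DLT-META's own implementation (DLT-META generates its flows from configuration). The function name `register_autoloader_append_flows`, the flow naming scheme, and the default `json` format are assumptions made for the example. The `dlt` module and the `spark` session are passed in as arguments because they are only available inside a running pipeline.

```python
def register_autoloader_append_flows(dlt, spark, target, source_paths, file_format="json"):
    """Register one append flow per source path, all writing to `target`.

    Hypothetical helper for illustration; `dlt` and `spark` come from the
    pipeline runtime and are passed in rather than imported here.
    """
    # The shared target table that every flow appends to.
    dlt.create_streaming_table(target)

    def make_flow(path):
        # Bind `path` per flow so each closure reads its own source.
        def flow():
            return (
                spark.readStream.format("cloudFiles")
                .option("cloudFiles.format", file_format)
                .load(path)
                # Expose the file metadata column fields as regular columns.
                .selectExpr(
                    "*",
                    "_metadata.file_name AS file_name",
                    "_metadata.file_path AS file_path",
                )
            )
        return flow

    flow_names = []
    for index, path in enumerate(source_paths):
        name = f"{target}_flow_{index}"  # assumed naming scheme
        dlt.append_flow(target=target, name=name)(make_flow(path))
        flow_names.append(name)
    return flow_names
```

Inside a pipeline notebook, this would be called after `import dlt`, passing `dlt`, `spark`, a target table name, and a list of source paths. Reading from delta tables instead of files would follow the same pattern, with the `cloudFiles` reader swapped for a streaming read of each table.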

Append flow with autoloader

Launch Command Prompt

Install Databricks CLI

- Once you install Databricks CLI, authenticate your current machine to a Databricks Workspace:

databricks auth login --host WORKSPACE_HOST

Install Python package requirements:

Core requirements:

pip install "PyYAML>=6.0" setuptools databricks-sdk

Development requirements (version specifiers containing > are quoted so the shell does not treat them as redirections):

pip install flake8==6.0 delta-spark==3.0.0 "pytest>=7.0.0" "coverage>=7.0.0" pyspark==3.5.5

Clone dlt-meta:

git clone https://github.com/databrickslabs/dlt-meta.git

Navigate to project directory:

cd dlt-meta

Set the Python environment variable in the terminal:

dlt_meta_home=$(pwd)

export PYTHONPATH=$dlt_meta_home

Run the command:

python demo/launch_af_cloudfiles_demo.py --cloud_provider_name=aws --dbr_version=15.3.x-scala2.12 --dbfs_path=dbfs:/tmp/DLT-META/demo/ --uc_catalog_name=dlt_meta_uc

- cloud_provider_name : aws or azure or gcp

- dbr_version : Databricks Runtime Version

- dbfs_path : Path on your Databricks workspace where demo will be copied for launching DLT-META Pipelines

- uc_catalog_name: Unity catalog name

- profile (optional): pass --profile=<databricks profile name> if you already have a Databricks CLI profile configured; otherwise the command prompt will ask for the host and token
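To show how the parameters above map onto the launch command, here is a small sketch that assembles the argument list in Python. The helper `build_launch_command` is hypothetical and not part of DLT-META. It only mirrors the flags shown in this section and checks the cloud provider against the three values listed.

```python
import sys


def build_launch_command(cloud_provider_name, dbr_version, dbfs_path,
                         uc_catalog_name, profile=None):
    """Assemble the demo launch command as an argument list (hypothetical helper)."""
    if cloud_provider_name not in ("aws", "azure", "gcp"):
        raise ValueError("cloud_provider_name must be aws, azure, or gcp")
    command = [
        sys.executable,  # the Python interpreter currently in use
        "demo/launch_af_cloudfiles_demo.py",
        f"--cloud_provider_name={cloud_provider_name}",
        f"--dbr_version={dbr_version}",
        f"--dbfs_path={dbfs_path}",
        f"--uc_catalog_name={uc_catalog_name}",
    ]
    # --profile is optional; without it the script prompts for host and token.
    if profile:
        command.append(f"--profile={profile}")
    return command


command = build_launch_command(
    cloud_provider_name="aws",
    dbr_version="15.3.x-scala2.12",
    dbfs_path="dbfs:/tmp/DLT-META/demo/",
    uc_catalog_name="dlt_meta_uc",
)
```

The resulting list could be passed to `subprocess.run(command, check=True)` from the dlt-meta project directory, with PYTHONPATH set as described above.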